I was recently invited to be a guest lecturer and panelist at a gathering of social sector staff from China, held on the campus of Harvard University. As someone who first lived in China in the late 1990s, and has been connected to China’s non-profit development for over a decade, it was encouraging to be in a room full of pioneers from a country where the existence of the social sector is a very recent phenomenon.

The topic of the panel discussion was the concept of impact in the social sector. The discussion included defining impact and the challenges associated with measuring it. The academic definition of impact was described as the long-term, sustainable change in a population. At Geneva Global, we think of impact in terms of systemic change that results in lasting, positive change for individuals and communities.

Whatever the definition, assessing impact is the sort of thing funders and large-scale non-profits often hire evaluation specialists to do. While formal impact assessments are important to the development and dissemination of good practice, this approach isn’t practical for smaller-budget projects, or for the majority of non-profits, which can be perennially resource constrained.

This was certainly true for the audience our panel was speaking to, and it is equally true for the average US non-profit. And as many grant managers will attest, evaluation line items can be the hardest to get funded.

Given these realities and the audience for the discussion, the moderator wisely ended the panel discussion with this question: Should smaller NGOs even attempt to measure impact?

The challenges associated with measuring impact are something we work through with our clients every time we design and implement a new granting program. The panel discussion reminded me of a gap I’ve observed in social sector work: there is often a lot of attention paid to tracking the most immediate and easily measured results of a non-profit’s work (often called outputs), while the long-term (hoped for, but rarely verified) impact is reflected in lofty mission statements.

What’s commonly not given enough consideration is the missing middle ground – beyond immediate results, but short of verifiable, long-term impact. In log frame language, these are referred to as outcomes.

However, it is this ‘missing middle’ that can confirm whether a grant is likely to achieve long-term impact, and can ensure that the passionate efforts of non-profit staff, and their funders, are actually contributing to their long-term, impact-oriented goals, without requiring the time and expense associated with formal impact assessments.

Therefore, my response to whether smaller NGOs should measure impact is two-fold: first, don’t let the challenges of measuring ‘impact’ distract you from assessing effectiveness.

Second, don’t forget that the value of assessment is not only in determining if a project was ‘effective’ or ‘impactful’, but also in continuous improvement internally and sharing lessons learned externally.

What follows are some practical and cost-effective suggestions for thinking about, providing space for, and assessing effectiveness.

Be clear about your theory of change

Whether you are a grantmaker or a grantee, developing your theory of change is the first step. Most grantmakers and non-profits have mission and vision statements, short- and long-term goals, and the like. But fewer take the time to think through and articulate a theory of change, which describes the problem being addressed, the approach taken to address it, and the expected outcomes.

A clear and thoughtful theory of change allows grantmakers to decide which grants they should place and why. It helps non-profits decide how to invest time and resources, including which grant opportunities to pursue. If grantmakers and grantees want to assess effectiveness, ensuring that grants and programs align with a thoughtful theory of change is the place to start.

Be sure performance indicators go beyond immediate outputs

For about a decade now, we’ve been running an accelerated learning program designed to get out-of-school children into the formal education system. The program’s long-term goal is to see children complete their education, which existing evidence tells us has lifelong health and well-being benefits at the individual and societal level.

However, this is the ‘too difficult to measure’ end of the spectrum, as it would require costly and time-consuming longitudinal studies beyond the budget or granting timelines of most projects or non-profit organizations. At the ‘easy to measure’ end, we should (and do) capture the number and percentage of students who complete the accelerated learning program.

But stopping there wouldn’t tell us if the program was effective at its stated goal. This we do not only by measuring what percentage of children complete the accelerated learning program (immediate and easiest to measure), but also by following up to see how many of those children then join the formal education system, and how many are still in school one year later. We also gather anecdotal evidence about how they perform against their peers.

Over the past several years, through continuous assessment and program refining, we’ve seen over 90% of program graduates continue their education, and have found that these students complete primary school at higher rates than the national average. This relatively low-cost follow-up goes beyond the program output (completing the accelerated learning program) to the desired outcome (continuing education). And it provides an affordable proxy indicator for the lifelong impact we hope to see but aren’t able to measure directly.

Create space for achieving greater effectiveness through multi-year funding commitments

The concept of patient capital is well established in the for-profit sector, but underdeveloped in the non-profit space, to the detriment of all stakeholders. Many grants are short-term – for a year or less – and there can be good reasons for that. However, there often are better reasons to offer longer-term funding.

Funding a project to expand the reach of an organization or to pilot something new, for example, requires multi-year funding to achieve the desired effect. In 2007, Geneva Global, working with the Legatum Foundation, moved from a single-year to a multi-year grantmaking approach. The model, which we called Strategic Initiatives, committed to multi-year granting, typically focusing on a specific issue in a specific geography: for example, reducing infant and maternal mortality in rural communities in India’s Bihar state.

A couple of years after moving to the multi-year model, when we reviewed results from more than 20 programs on four continents, we found that performance against benchmarks had increased by more than 10% compared with single-year grants. We saw even greater increases over time.

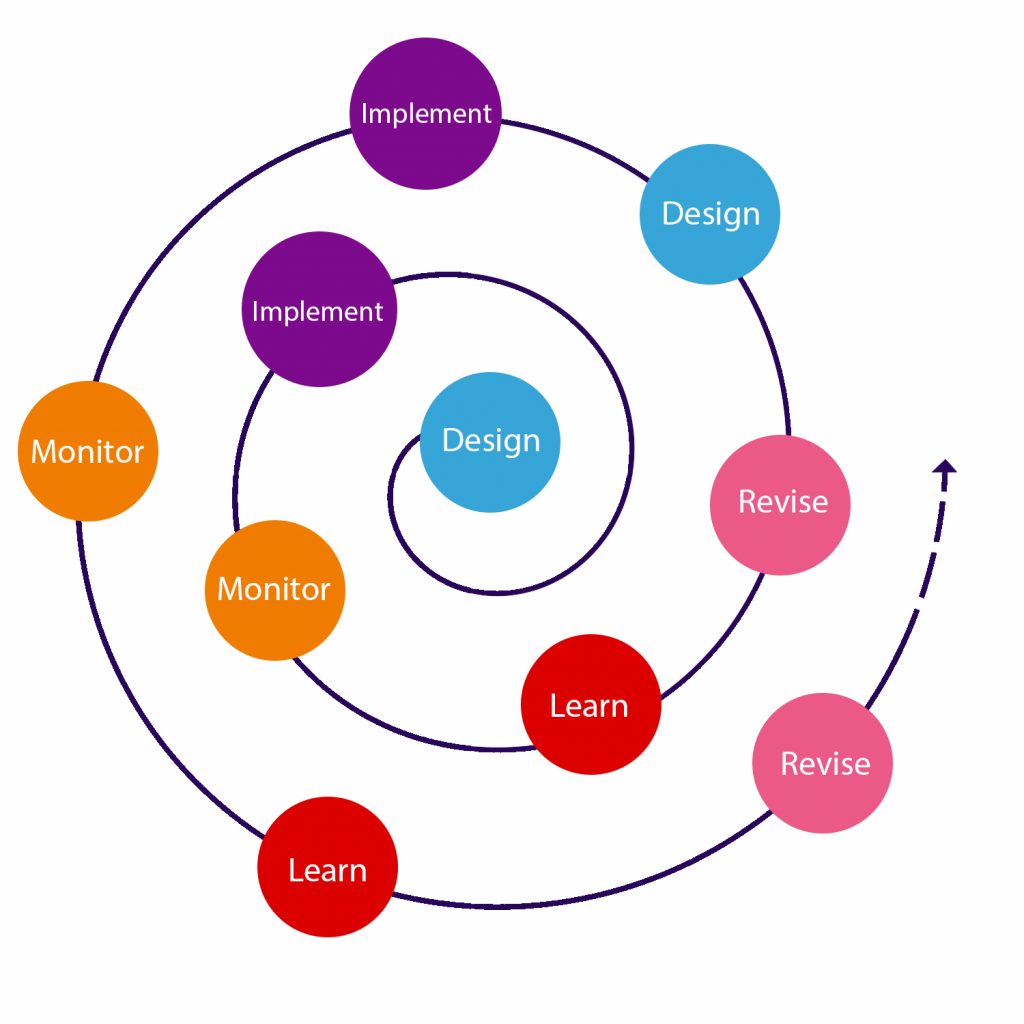

Even so, we recognize that lasting impact that will move the needle takes time. As a result, we’ve recently been piloting a mixed-funding model, with a ten-year time horizon. Allowing organizations and granting programs multi-year funding provides space and time for the ‘learning loop’ – implement, learn, revise/innovate/improve – a process that is more likely to lead to lasting impact than shorter-term grantmaking is.

Set realistic goals that allow time to realize desired results

Building on the concept of a learning loop mentioned above, the majority of challenges – and of lessons for program improvement – are likely to surface in the first year. This is especially true where a grant takes a program from concept to implementation, or attempts to scale a proven model. Setting realistic indicators for the first year builds in this reality; failure to do so can set unrealistic expectations that, if not realized, can jeopardize funding beyond a program’s inception year.

An example of this was our program designed to reduce infant and maternal mortality rates (IMR/MMR) in rural Bihar, India. The program was addressing multiple factors that contributed to high IMR/MMR – unsafe birthing practices, lack of antenatal care, lack of trained birth attendants, lack of access to childhood immunizations, and more – and progress required time. The program’s benchmarks for progress reflected this, anticipating greater IMR/MMR reductions over time.

By the end of the first year, we had seen significant reductions, but by the third year, once the program activities had resulted in improvements across various contributing factors, many villages had seen rates drop to near zero. The outcomes exceeded our expectations. By setting conservative expectations for the first year and scaling up the hoped-for outcomes each following year, we gave all stakeholders – the program funder, the local NGOs, and ourselves as program managers – the time and space required to see the kind of change we’d hoped for.

Encourage and reward honest reporting that goes beyond numbers and stats

Especially in international grantmaking, the fact is that the vast majority of grantmakers can’t visit and verify all of the results their grantees report. As a result, many funders rely largely on self-reporting from grantees. When reporting templates focus exclusively on results indicators (such as a percent reduction in IMR/MMR, or the number of children completing an accelerated learning program), and especially if there are punitive reactions to ‘underperformance’, grantmakers are likely to discourage honest reporting.

In addition, reporting that focuses mainly on performance indicators means that grantmakers are likely to miss equally meaningful information about the program: what challenges were faced, what lessons were learned, and what course corrections were needed.

For funders, communicating from the beginning that you are interested in these things, as well as in the program metrics, creates space for open communication that can benefit all stakeholders. Grantee organizations would be well served to seek out funders who show an interest in the process as well as the results.

At Geneva Global, our grant management model includes on-the-ground staff and consultants who regularly visit grantees’ work, so we don’t face the verification obstacle that some do. Even so, our reporting templates encourage grantees to share qualitative and quantitative information, including challenges and lessons learned.

We are a very results-oriented organization, so I’m not suggesting that grantees shouldn’t be held accountable. But we also recognize that working with local organizations in underdeveloped and often remote contexts means that underperformance can be a symptom of capacity challenges.

We look for grantee organizations that have a learning attitude and seek to pursue continuous improvement. When grantmakers invest in these organizations with patient capital, they will almost always get greater-than-expected results in the long term, and leave behind better-equipped local organizations when funding is concluded.

Don’t forget the multiple purposes of assessment

It’s easy to fall into the trap of thinking that the purpose of an assessment is answering the question: did this program work? After all, it’s a question worth asking, and it is often what boards of foundations and non-profits want to know.

But we shouldn’t forget that much of the value of an assessment comes from the lessons it surfaces: lessons that give non-profits the feedback needed for continuous program improvement and that, if shared, can benefit others working in the same space.

If all assessments shared these aims, then the speed of continuous improvement would accelerate, money and effort would be saved, and all stakeholders – funders, grantees, and communities – would enjoy greater results on a shorter timescale.

Assessing effectiveness doesn’t have to require hiring additional staff, engaging a specialist, or collecting data over several years. It’s about avoiding the temptation to only focus on immediate results. It’s about ensuring that the activities and approaches an organization is taking are having the desired effect. It’s about knowing that your efforts are contributing to your organization’s aspirational mission statement.